Introduction

Artificial Intelligence (AI) is revolutionizing the way music is produced, opening up new possibilities for creators and changing the way we listen to and interact with music. From songwriting and composing to mixing and mastering, AI is being used in a variety of ways to enhance the music production process. In this article, we will explore the various ways AI is being utilized in the music industry, the benefits it brings to the table and the potential challenges it poses. We will also look at some examples of how AI is being used in the music production process and what the future may hold for the intersection of AI and music.

The above paragraph was written by ChatGPT, the chatbot developed by OpenAI. It was in response to my prompt: “Write an introduction to an article called ‘Music Production and Artificial Intelligence (AI).’”

The buzz around AI has ramped up lately, driven by the release of online resources like ChatGPT, which uses an optimized language model and allows for conversational communication with users. It will write essays and detailed responses to user prompts that are grammatically correct and will not be flagged by plagiarism detection software (which is, ironically, AI-based) because it is generating original text. This has caused some alarm amongst educators concerned that students could take advantage of the new technology to avoid doing their own work. Predictably, this has spawned a whole sector of AI detection software like GPTZero.

In the visual arts, resources like DALL-E 2 will generate images based on user-supplied text. This raises all sorts of questions surrounding the idea of creative authorship and may shift the traditional model of artistic creation to an AI-based process in which the artist’s ability to provide inspired prompts becomes the work of art.

This sort of parametrical approach has been embraced by some and criticized by others. But in effect, it has been going on for some time. We all choose parameters at the start of any work regardless of artistic discipline. A painter chooses a canvas size and shape, a writer chooses a subject and perspective and a composer chooses a tempo, key, instrumentation, etc. What is perhaps different about using AI as a collaborator is that the computer will not deviate too far from the initial parameters — at least not yet. A human artist, on the other hand, will recognize serendipitous events as they unfold during the process and use them for creative effect. It is not at all unusual for creative work to take a drastically different direction based on human observation and decision-making throughout the process.

But using AI does not mean that the human element is necessarily relegated to generating the initial prompts alone. Altering the prompts and editing the results are still the responsibility of the artist, or at least they should be. While setting up a process and letting it ride untouched may be a conceptually pure implementation of AI, dogmatic approaches to artistic creation rarely yield positive results. It could be that it is precisely the “mistake,” or more accurately the “interesting mistake,” that’s the essence of human-made art.

Like it or not, music production will experience dramatic changes in the years (or even months) to come. AI audio tools and music generation software have been around for some time now, and AI implementations are proliferating while the quality of the results continues to improve dramatically. What follows is a description of just some of the software now available for audio and music production. But first, let’s identify some of the terms you might come across in your search for the perfect AI collaborator.

Terminology

Artificial intelligence (AI) – this term was coined in 1955 by computer scientist John McCarthy and refers to “the science and engineering of making intelligent machines.”

Machine learning – refers to the ability of computers to learn patterns from data and improve at a task without being explicitly programmed for it.

Deep learning – is machine learning that uses artificial neural networks (ANN) that attempt to simulate biological processes.

Supervised learning – is machine learning that trains on examples a human has labeled with the correct answer, so the model learns to map inputs to known outputs.

Unsupervised learning – is machine learning that looks for structure, such as clusters, in data that has no labels.

Cybernetics “is the science of human-machine interaction that employs the principles of feedback, control and communication.” It is often confused with AI, but there are some important distinctions. Whereas “AI is based on the realist view that machines can work and behave like humans, Cybernetics is based on a constructivist view of the world.” (source)

NOTE: “Constructivism is the theory that says learners construct knowledge rather than just passively take in information. As people experience the world and reflect upon those experiences, they build their own representations and incorporate new information into their pre-existing knowledge (schemas).” (source)

Algorithm – a set of instructions or mathematical formulae that produces an output based on user input.

Chatbot – uses a language-based model of artificial intelligence to “converse” with users.

Image recognition – allows computers to identify patterns in imagery based on machine learning.

Natural language processing (NLP) – is technology that allows computers to interpret and respond to written or verbal language produced by humans.

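Generative adversarial network (GAN) – is a machine learning technique that pits two neural networks against each other, a generator that produces candidates and a discriminator that judges them, and is widely used to generate realistic images.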
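To make the supervised/unsupervised distinction concrete, here is a minimal sketch in plain Python. The tempo values, genre labels and function names are all made up for illustration, and neither function reflects how any product mentioned in this article actually works. The supervised function learns from examples that a person has labeled; the unsupervised one is given only the raw numbers and has to find the two groups on its own.

```python
from statistics import mean

# Hypothetical toy data: track tempos in BPM, each tagged with a genre by a human.
LABELED = [(70, "ballad"), (76, "ballad"), (82, "ballad"),
           (124, "house"), (126, "house"), (128, "house")]


def train_supervised(examples):
    """Supervised: learn one average tempo (centroid) per human-provided label."""
    by_label = {}
    for tempo, label in examples:
        by_label.setdefault(label, []).append(tempo)
    return {label: mean(tempos) for label, tempos in by_label.items()}


def predict(centroids, tempo):
    """Return the label whose centroid is closest to the given tempo."""
    return min(centroids, key=lambda label: abs(centroids[label] - tempo))


def cluster_unsupervised(tempos, iterations=10):
    """Unsupervised: 1-D k-means with two clusters; no labels are supplied.

    Assumes the list contains at least two distinct values.
    """
    low, high = min(tempos), max(tempos)
    for _ in range(iterations):
        group_low = [t for t in tempos if abs(t - low) <= abs(t - high)]
        group_high = [t for t in tempos if abs(t - low) > abs(t - high)]
        low, high = mean(group_low), mean(group_high)
    return sorted(group_low), sorted(group_high)


centroids = train_supervised(LABELED)
print(predict(centroids, 120))
print(cluster_unsupervised([tempo for tempo, _ in LABELED]))
```

Note that the clustering step recovers the same two groups but has no idea what to call them. Naming the clusters is still a human job.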

Mixing and Effects Processing

Perhaps some of the most interesting and potentially useable applications of AI in audio production can be found in mixing and effects processing software.

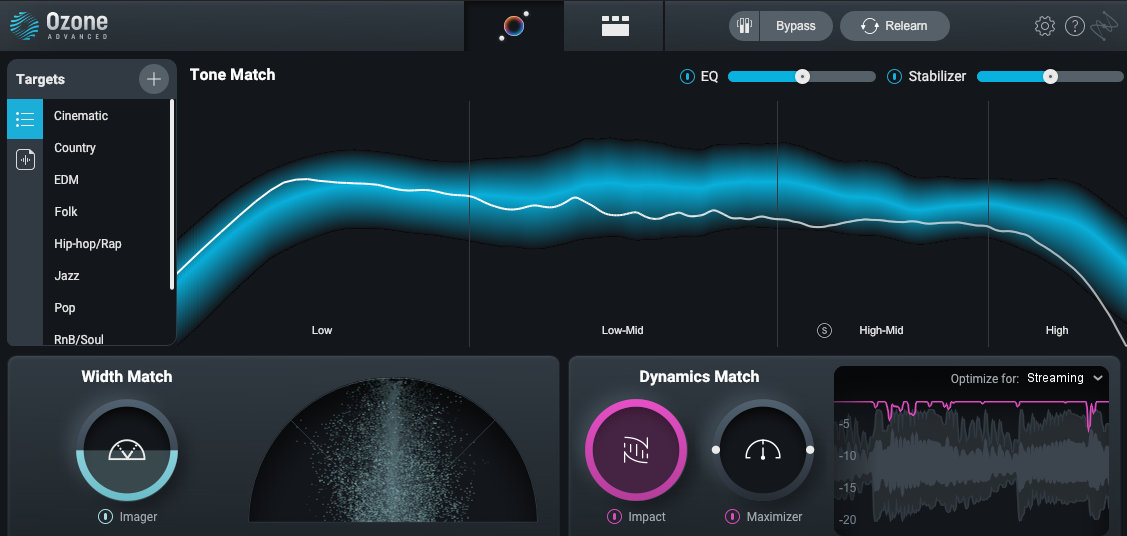

Ozone and Neutron by iZotope

The Ozone 10 and Neutron 4 effects suites include an “AI assistant” which analyzes your session and creates what it considers to be the optimal processing effects chain, with settings based on genre. Does it work flawlessly? No. But it can potentially speed up your work by quickly loading plugins you may have used anyway. Or, it could suggest things you did not consider and serve as inspiration for an entirely new direction. As with many of these tools, there is the misconceived notion that the AI will do the work as well as or better than an experienced engineer. That approach is doomed to failure. However, if you view AI as a collaborator, the results may be much more productive.

Sonible Plugins

Sonible offers a series of “smart” plugins including: Smart: EQ, Smart: Limit, Smart: Reverb and others. The Smart: EQ uses AI to “clean-up unpleasant resonances and unwanted notches, leaving you with a well-balanced sound — and therefore an ideal basis to take further creative steps.”

Chameleon by Accentize

Chameleon is “an intelligent audio plugin which uses artificial neural networks to estimate and model the exact reverb content of any source recording.” This can be a great post-production tool for matching the spatial characteristics of a space when recording ADR and Foley effects.

RX 10 by iZotope

Long revered as the preeminent post-production suite, RX 10 includes a feature called Repair Assistant, which generates a chain of de-noising options based on the type of input, such as voice, music, percussion or sound FX. Although the result is rarely perfect, it does identify issues and potential solutions quickly, which can then be tweaked and refined as needed.

Online Mastering Services

LANDR

LANDR is an online mastering service that broke new ground when it promised to master mixes with blazing speed at incredibly low prices when compared to the fees charged by a traditional mastering engineer.

eMastered

eMastered is another such service that claims to be made by Grammy-winning engineers and is powered by AI.

While it is doubtful that many successful artists with a reasonable budget would choose AI mastering over an A-list mastering engineer, these sorts of services definitely have their place for the home producer with limited funds. The systems are genre-specific and may not be appropriate for all types of music.

Demix and Remix Processing

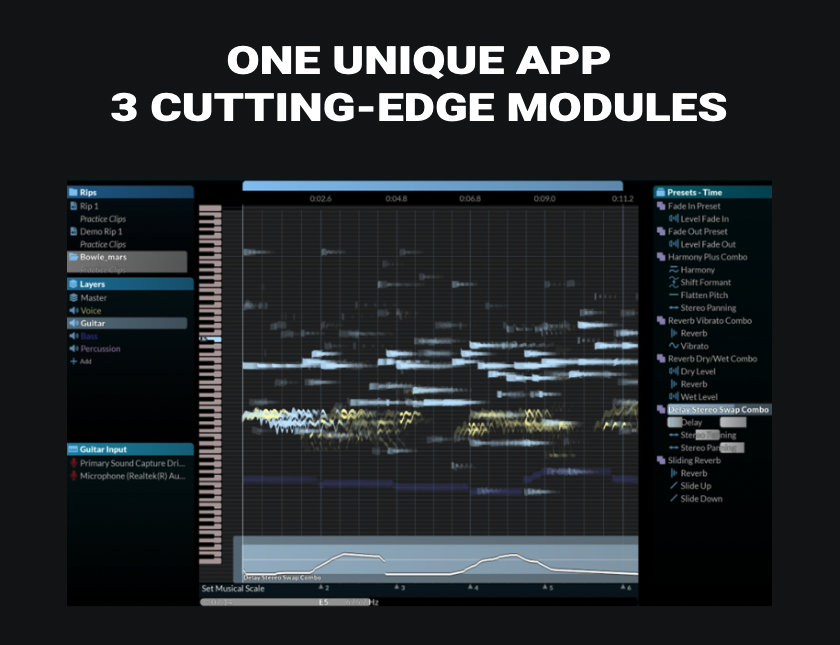

RipX by Hit’n’Mix

RipX includes three AI-based modules designed to separate and/or replace stems from mixed music files. The bundle includes:

DeepRemix – “splits full-mix stereo MP3s, WAVs, etc. into vocals, guitar, piano, drums, bass and other instruments.”

DeepCreate – “adds cutting-edge MIDI/MPE and audio recording to the RipX platform, plus lets you experiment with stems, sounds and samples in new and highly innovative ways.”

DeepAudio – “adds advanced stem clean-up and audio manipulation tools to the workflow, so you can create the highest quality extracted audio, and tweak/create sound at unprecedented levels of detail.”

The Sound of Pixels – MIT

This fascinating research project at MIT uses a neural network to identify and separate the sound produced by a particular instrumentalist in a video.

Music and Sample Generation

One of the most contentious areas of AI is content creation. This is where many traditional composers scoff and shake their heads in disgust. This could be a reaction to the often subpar or banal results generated by AI content creators. Or, maybe it’s the underlying fear of being replaced. But fear not humans, AI content creation comes in many forms and can be a useful tool in the right hands. In terms of banality, a quick tour of YouTube instructional videos will reveal an endless supply of terrible music created by humans, so why shouldn’t AI have a crack at it as well?

Arcade by Output

Many people are using Arcade and taking advantage of their ever-growing library of sampler instruments. But you can also use their Kit Generator to input your own samples. The software analyzes the sound and generates a unique kit complete with effects processing. You can change the way Arcade chops and slices your sound using four distinct modes. While there is no mention of AI-based procedures on the Output site, this process seems really smart to me.

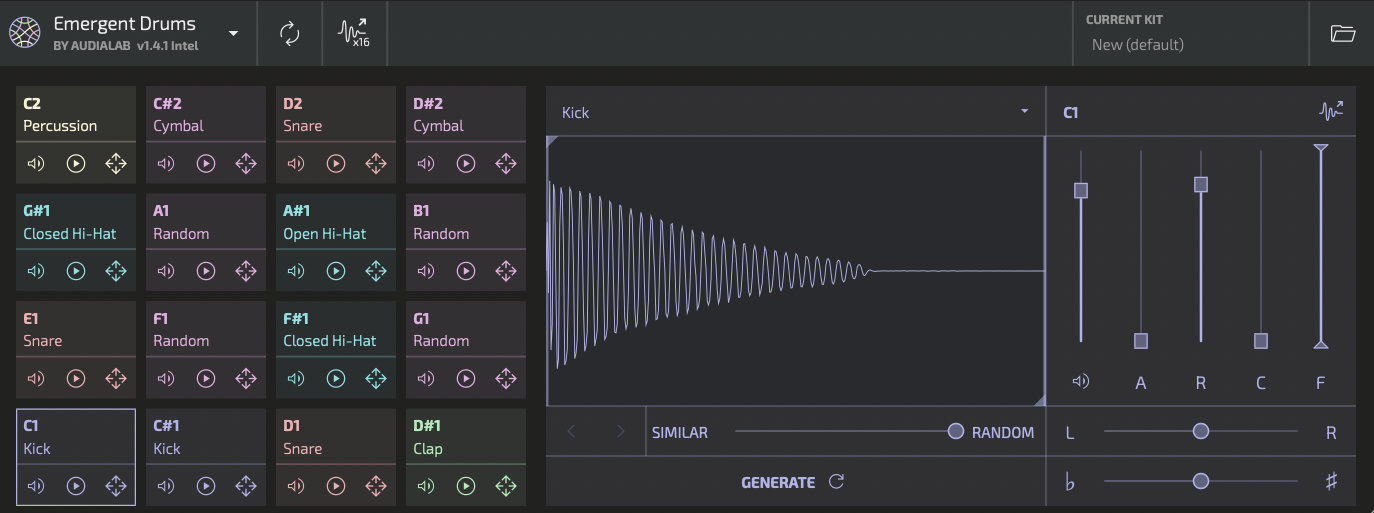

Emergent Drums by Audialab

This software uses AI to create drum samples from scratch, guaranteeing unique, royalty-free samples. The user can alter parameters at will to modify existing sounds on a continuum from similar to completely random. The GUI is a familiar drum-pad layout with additional controls for pitch, panning and amplitude envelope.

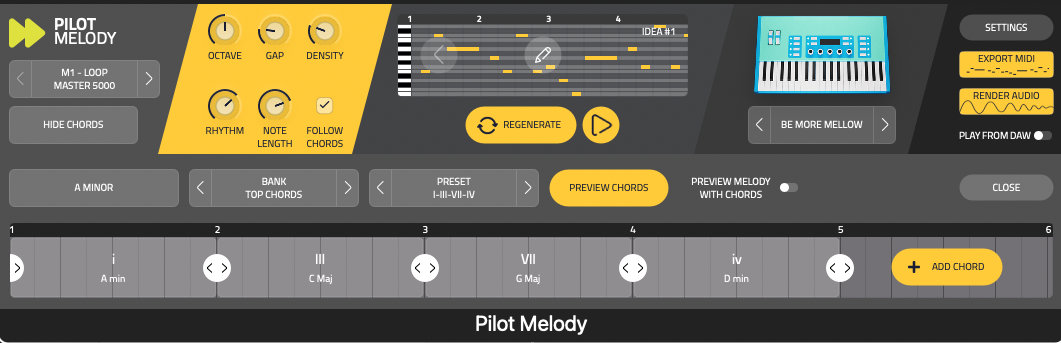

Pilot Plugins by Mixed in Key

These plugins include Pilot Melody, Pilot Bass and Pilot Arpeggio. They will create content based on a chord progression you can edit in the plugin GUI, or you can connect them to one of the company’s other devices, Captain Chords. The suite seems to be oriented toward techno, future rave and house genres. The devices are very user friendly and provide intuitive parameters such as density, syncopate and note length, each of which can be altered on the fly. You can export content as MIDI or audio with drag-and-drop ease.

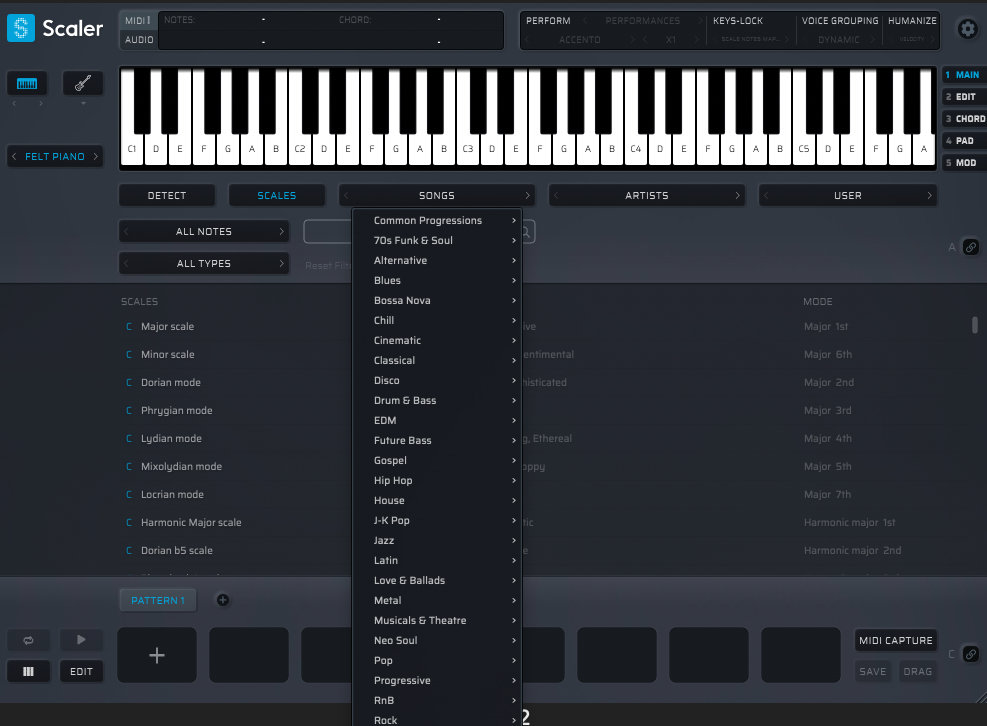

Scaler 2 by Plugin Boutique

Scaler can “determine what key and scale you’re in and suggest chords that match your music. The plugin helps you discover the perfect melody with performance expressions, and it can even suggest ways to change from one key to another.” It has a robust collection of content that includes chord sets, phrases, sequences, basslines, melodies, rhythms, modulation pathways and chord substitutions.
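For readers curious about what “determining the key” might involve, here is a deliberately simple sketch in Python. It is not Scaler's method, which is not described in the source. It scores all twelve major keys by how many of the played notes belong to each scale, picks the best fit, and lists the triads built on that scale. The function names and the sample melody are hypothetical.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of the major scale
TRIAD_QUALITIES = ["", "m", "m", "", "", "m", "dim"]  # I ii iii IV V vi vii


def guess_major_key(midi_notes):
    """Return the tonic (0-11) of the major key containing the most played notes."""
    pitch_classes = [note % 12 for note in midi_notes]

    def score(tonic):
        scale = {(tonic + step) % 12 for step in MAJOR_STEPS}
        return sum(pc in scale for pc in pitch_classes)

    # On a tie, max() keeps the first (lowest-numbered) tonic.
    return max(range(12), key=score)


def diatonic_chords(tonic):
    """Name the seven triads built on each degree of the major scale."""
    return [NOTE_NAMES[(tonic + step) % 12] + quality
            for step, quality in zip(MAJOR_STEPS, TRIAD_QUALITIES)]


# Hypothetical melody as MIDI note numbers: D F# A G E C# D
melody = [62, 66, 69, 67, 64, 61, 62]
tonic = guess_major_key(melody)
print(NOTE_NAMES[tonic], diatonic_chords(tonic))
```

A counting approach like this cannot tell a major key from its relative minor, and it ignores rhythm and emphasis entirely. That is part of why commercial tools presumably rely on more than a lookup table.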

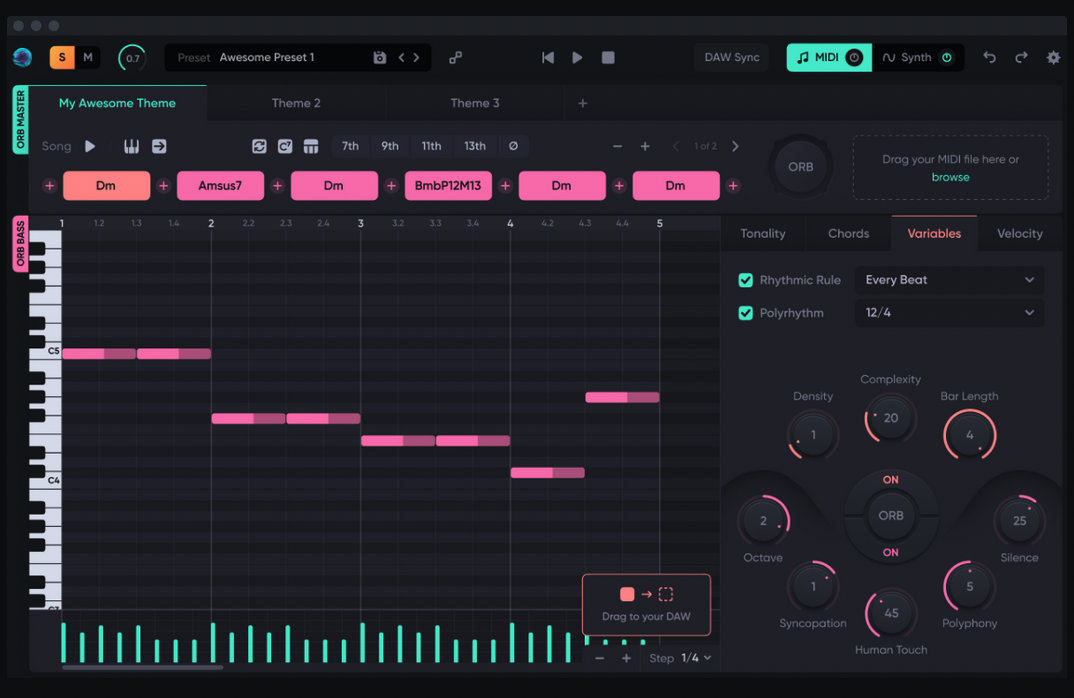

ORB Producer Suite by Hexachords

This software boasts AI computing capable of an infinite number of patterns, melodies and basslines. I wrote about the beginnings of the software, then called Orb Composer, back in 2018, and it has come a long way since then, adding polyrhythms, block chaining (“lets you create one long piece of music from all of your themes for continuous playback at the click of a button”) and more.

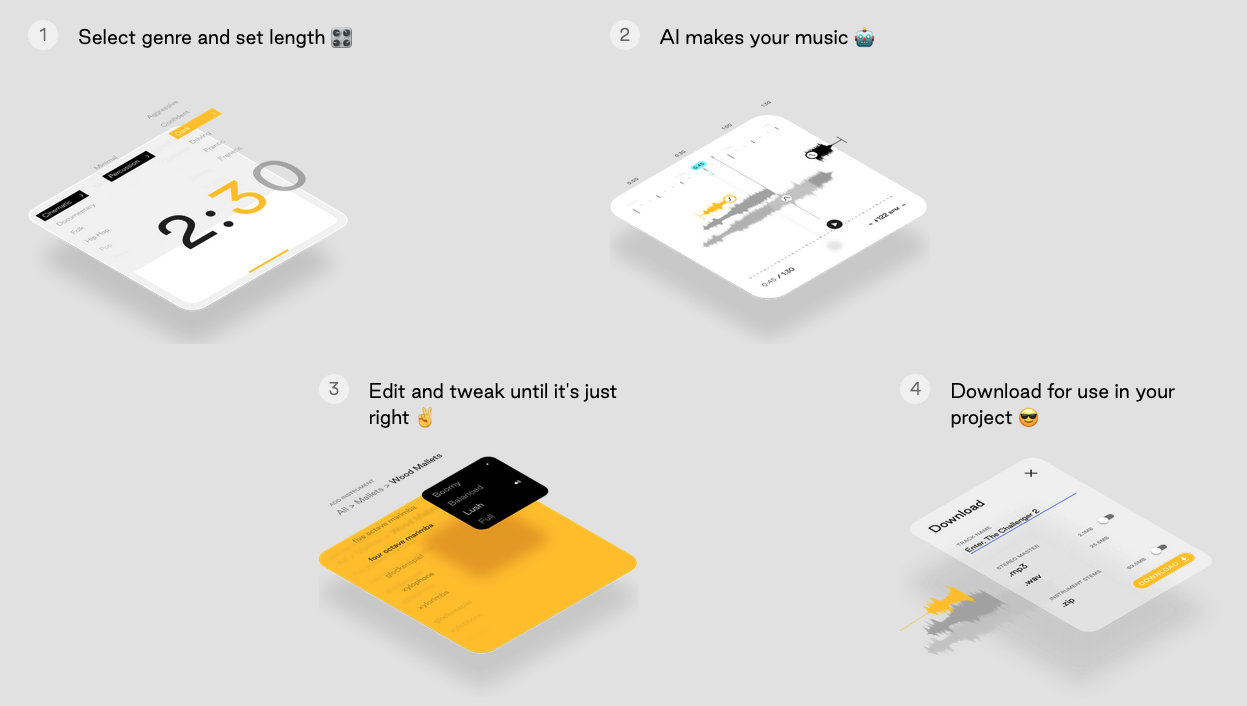

Amper Score by Shutterstock

Online services like Amper make creating royalty-free music with AI brutally simple: select genre and length, press the button, tweak and download. The results are pretty stunning in terms of audio quality and the speed at which content can be generated. For non-composers who need tons of original music for things like video or podcasts, this is an extremely attractive service. It will definitely reduce the need for music library content composed in the traditional way. Production music composers will need to adapt to this new arena and learn how to inject this automated process with a healthy dose of humanity.

Below is a demonstration of how Amper Score works:

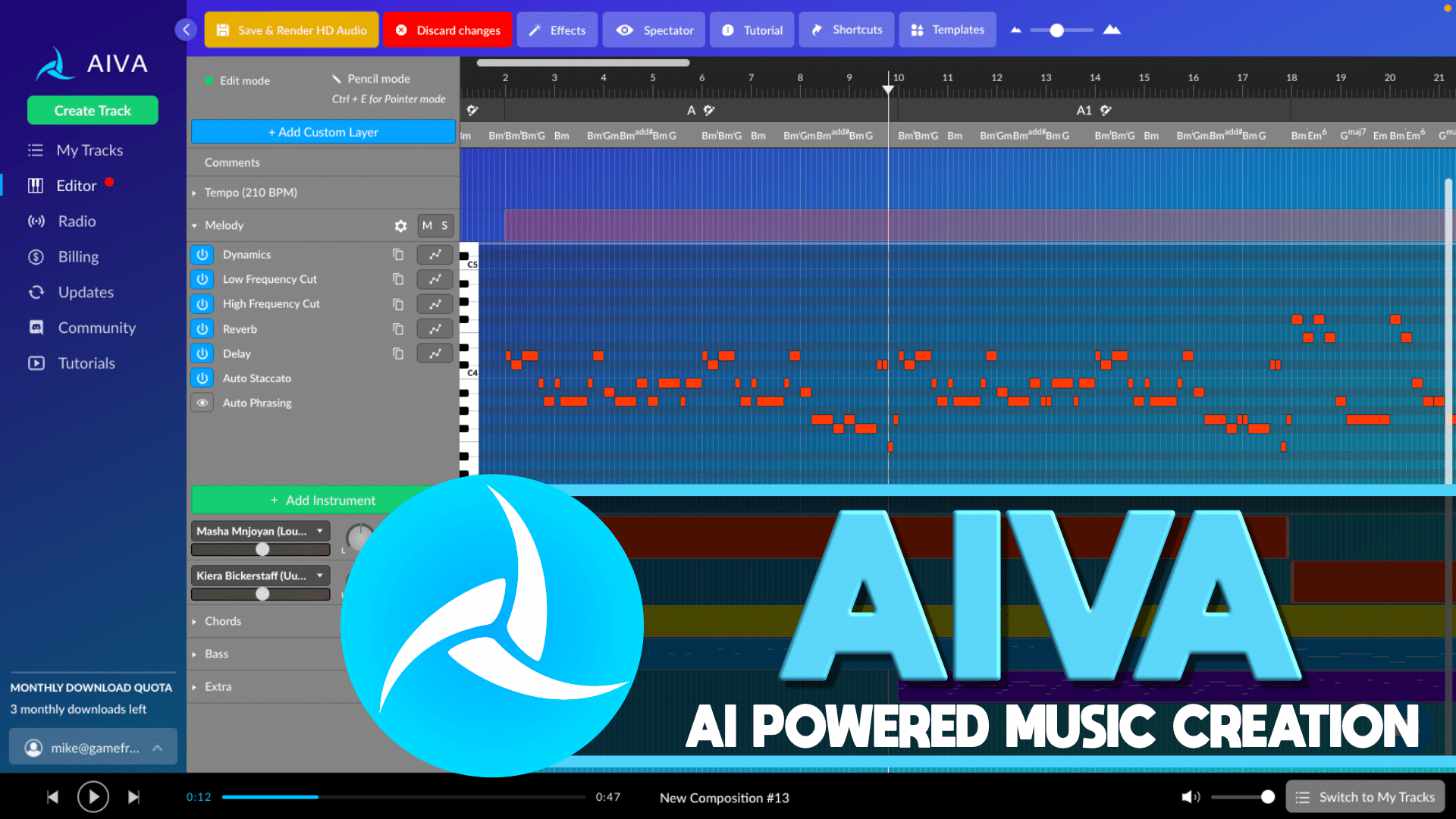

Aiva

Here’s a track composed by Aiva, another AI composing platform:

Artist Benoît Carré suggests the next step in AI-generated music will involve delving further into deep learning techniques, while on a personal level, he hopes to develop a live show based around his developments in AI. “I want to show that there is an artist behind the project. Often one of the issues people have with artificial intelligence, is that they think that the artist is just pushing the buttons — and that’s not the case… there’s still a role for composers to be pushing that envelope forward, because at the moment AI is only capable of doing what has happened before.” (source)

The key phrase in this quote for me is that last bit, “at the moment,” because the singularity is coming and no one really knows what that will mean.

Other apps “such as Mubert, Ecrett Music and Songen allow independent creators to generate royalty-free music in a few steps. Such services use AI-assisted engines that sketch song ideas users can customize and finish according to their needs and preferences.” (source)

There are several artists that have embraced AI as a collaborator including Benoît Carré (mentioned above), Grimes, Kiesza, Ash Koosha, Taryn Southern and Holly Herndon. (source)

MusicLM: Generating Music From Text

MusicLM is a new AI model that is capable of generating music from text prompts as 24 kHz audio. According to the developers:

MusicLM casts the process of conditional music generation as a hierarchical sequence-to-sequence modeling task, and it generates music at 24 kHz that remains consistent over several minutes. Our experiments show that MusicLM outperforms previous systems both in audio quality and adherence to the text description. Moreover, we demonstrate that MusicLM can be conditioned on both text and a melody in that it can transform whistled and hummed melodies according to the style described in a text caption. To support future research, we publicly release MusicCaps, a dataset composed of 5.5k music-text pairs, with rich text descriptions provided by human experts. (source)
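The 24 kHz figure in that quote is a sample rate, not a bit depth, and a little arithmetic shows what it implies. The sketch below uses only the 24 kHz value from the quote and the standard 44.1 kHz CD rate for comparison. The helper names are my own.

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency a given sample rate can represent (half the rate)."""
    return sample_rate_hz / 2


def samples_for(duration_s, sample_rate_hz):
    """Number of samples per channel needed for a clip of the given length."""
    return int(duration_s * sample_rate_hz)


MUSICLM_RATE_HZ = 24_000  # from the quoted description
CD_RATE_HZ = 44_100       # standard CD audio, for comparison only

print(nyquist_hz(MUSICLM_RATE_HZ))       # upper frequency limit at 24 kHz
print(nyquist_hz(CD_RATE_HZ))            # upper frequency limit at CD rate
print(samples_for(30, MUSICLM_RATE_HZ))  # samples in a 30-second clip
```

In other words, audio at this rate tops out at 12 kHz, well short of what a CD can carry, so the generated clips will sound somewhat less airy than a finished master.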

The model seems capable of complex formal constructions with transitions such as this text prompt:

jazz song (0:00-0:15)

pop song (0:15-0:30)

rock song (0:30-0:45)

death metal song (0:45-1:00)

rap song (1:00-1:15)

string quartet with violins (1:15-1:30)

epic movie soundtrack with drums (1:30-1:45)

Scottish folk song with traditional instruments (1:45-2:00)
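A timed prompt like the one above is really a small data structure: a list of descriptions, each with a start and end time. Here is one way it could be parsed in Python. This is only a sketch of the format as it appears in this article; it is not MusicLM's interface, and the `Segment` class and function names are invented for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Segment:
    prompt: str
    start: int  # seconds from the beginning of the piece
    end: int    # seconds from the beginning of the piece


# Matches lines such as "jazz song (0:00-0:15)".
LINE = re.compile(r"^(.*?)\s*\((\d+):(\d{2})-(\d+):(\d{2})\)$")


def parse_schedule(text):
    """Turn a block of 'description (m:ss-m:ss)' lines into Segment objects."""
    segments = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        match = LINE.match(line)
        if match is None:
            raise ValueError(f"unrecognized line: {line!r}")
        prompt, m1, s1, m2, s2 = match.groups()
        segments.append(
            Segment(prompt, int(m1) * 60 + int(s1), int(m2) * 60 + int(s2))
        )
    return segments


def prompt_at(segments, second):
    """Return the description active at a given second, or None if none is."""
    for segment in segments:
        if segment.start <= second < segment.end:
            return segment.prompt
    return None


SCHEDULE = """
jazz song (0:00-0:15)
pop song (0:15-0:30)
rock song (0:30-0:45)
death metal song (0:45-1:00)
"""

segments = parse_schedule(SCHEDULE)
print(prompt_at(segments, 50))
```

Seen this way, the interesting part of the model's job is not reading the schedule but making musically convincing transitions at each boundary.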

Check out a slew of examples here including categories like:

- Audio generation from rich captions (sophisticated sentence-based descriptions) such as:

“Industrial techno sounds, repetitive, hypnotic rhythms. Strings playing a repetitive melody creates an eerie, unsettling atmosphere. The music is hypnotic and trance-like, and it is easy to get lost in the rhythm. The strings’ high-pitched notes pierce through the darkness, adding a layer of tension and suspense.”

- Long generation (genre-based)

- Story mode

- Text and melody conditioning

- Painting caption conditioning

- Instrument-based descriptors

- Genre-based descriptors

Practical Applications for Producers

For those that want to incorporate AI into their workflow, the possibilities will be expanding rapidly in the months and years to come. As mentioned above, these will include:

- AI-assisted mixing and mastering plugins and online services

- Demix and Remix Processing for creative work

- AI-assisted music and sample content generation

- AI-assisted harmony and melody generation

- AI-assisted post-production plugins

- AI-assisted music production based on language prompts

- AI-assisted lyric generation

Conclusions

The world as we know it is on the verge of unprecedented change — because it is no longer a question of “if”, but “when” computers will achieve self-consciousness. The prospect of this momentous event has driven narratives that range from the dystopian downfall of humanity to an enthusiastic optimism regarding the merging of man and machine.

From a human perspective, it is a false assumption that self-consciousness is a quality solely of “healthy” individuals. Don’t psychotics and sociopaths also have self-consciousness? And what would a psychopathic computer be capable of creating or destroying?

Will computers ever have the ability to change direction during the creative process based on unexpected or unintended results that emerge along the way? This gets to the issues of intuition, serendipity, and the ability to recognize a “mistake” as something interesting or exciting versus being simply “wrong.”

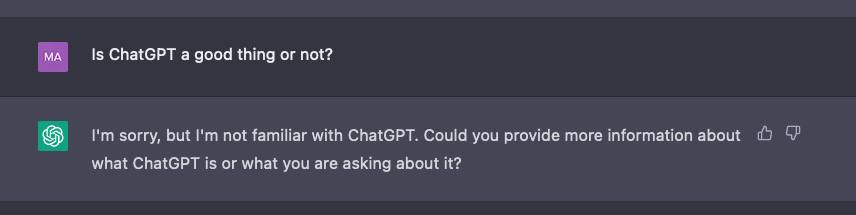

I asked ChatGPT to comment on whether “it” was a good thing or not. Its response demonstrated a decided lack of self-awareness — at least for now.

The renowned computer scientist and futurist Ray Kurzweil observed that technology is advancing at an exponential pace. It seems to me that, as an artist, it is essential at the very least to be aware of the changes to come and, at best, to be prepared to embrace new tools and explore new directions in creative praxis.

Artist Commentary

To get some human (and machine) feedback on the subject, I posed the following question to a few artists, colleagues, and ChatGPT:

What is your opinion on the use of AI in the arts and in particular in regard to your work?

Below are their unedited responses:

From Alysse Stepanian:

The dazzling and unlimited possibilities of AI-assisted creative work can be exhausting to an already overwhelmed and numbed audience. What’s important to me is the use of the creative tool with a philosophical approach, to seek answers to the questions raised within the process of art making. Friedrich Nietzsche suggested that art is a lie that we tell ourselves in order to survive: “Truth is ugly. We possess art lest we perish of the truth.” This simple concept might explain the mass attraction to the creative use of AI — anyone can create; everyone is an artist. The monetization of this creativity is the icing on the cake (or is it the cake itself?). At the turn of the 20th century, when the technological landscape was drastically changing, modernist artists rebelled against the commodification of art. In the end, their rebellion was co-opted within the commercial realm. Duchamp (and his fellow Dadaists) with his signed urinal and mustached Mona Lisa mocked the very idea of ‘high art’, foreseeing the era of postmodernism, decades later.

We have now gone from the postmodern deconstruction and rejection of the idealism of modern art, with the “anything goes” attitude, into the 21st century’s open embrace and enthusiastic contribution of artists to the commercialization of art. The sarcasm of Barbara Kruger’s I shop therefore I am work is now passé. About NFTs, Brian Eno has famously stated, “How sweet — now artists can become little capitalist assholes as well.” In response to my prompt, “Write two sentences about the historical resistance to new art movements within the context of AI-assisted art and NFTs,” ChatGPT wrote the following:

“Historically, new art movements have faced resistance from traditional art circles, and this trend continues with the emergence of AI assisted art and NFTs. Despite this resistance, AI and NFTs have the potential to challenge existing power structures in the art world and open up new avenues for artists and collectors.”

Appropriation art that rose in the 80s is now common practice in AI-assisted work. Do I find it more fascinating to look at an Arshile Gorky and a Mark Rothko, than viewing works that have “borrowed” or recontextualized their personal creative language? The two are not mutually exclusive.

At certain times of despair, when it feels like the future is dead, the only thing that interests me is to learn to code. Ad Reinhardt has said, “Art is art and everything else is everything else.” Perhaps code is all that matters and everything else is everything else.

Alysse Stepanian cross-media artist, independent curator

http://alyssestepanian.com @alyssestepanian (Instagram)

From David Stout:

A.I. image generation is another method of synthesis. Synthetic image making has a storied history on a parallel path to the development of audio synthesis. I began as a painter and image maker before moving into electronic music and sound. Ultimately, I connected the two disciplines into one, first through video synthesis and then generative digital techniques including the sonification of imagery. AI image generation is a new form of synthesis. Because it is a form of simulation, it can portray a wide variety of techniques, hand drawing, woodblock printing, photorealism, Hollywood cinematography, you name it.

In some senses many are alarmed by the fact that AI produces works that appear to be made by human hands. Others have made a blanket claim that all AI image generation is theft since the neural nets have not only been trained on historic figures of the past but include many living artists working today. Clearly contemporary artists should be compensated and/or able to opt out of inclusion in the database. I take a much longer view of all this, which requires an interrogation of where “Art” comes from in the first place. It is not merely a handcraft skill as language plays a large part in the conceptual process, as does borrowing inspiration from other artists and musicians. There is much more to the verisimilitude of A.I. image synthesis than photographic realism and Neo-baroque portraiture.

In my work as a machine whisperer, I am probing the possibility of discovery by gazing into a mirror of the collective consciousness. I am doing so in celebration of the visceral, whether it is in reference to images captured by lenses, sloshing pigment, scratching, scraping our finger painting. These are the things I know in my body from a lifetime of artmaking just as a tongue recalls a song from childhood. In this way the A.I. has no memory or visceral experience to draw from. Our shared experiences form the mirror from which these images are uniquely and consciously reimagined.

I would offer a challenge to the fans and detractors of AI image synthesis alike. Abandon the use of living artists in your prompts. Collaborate with historic figures, the creative souls of the past, to imagine new futures. Push the rapidly developing art of simulation into unexpected terrain, explore both traditional art media and unlikely art materials, spend time considering transformational concepts for building prompts, make images that communicate to others outside your limited social sphere, open yourself to new ecologies, new relationships, dream a new world into existence. Nurture your empathy, be as diverse as possible — age, gender and race are all fluid dynamic ways of being — celebrate “us” — elevate “we.” Consider depictions of tenderness and sensuality, which are not remotely the same things as pornography. Consider your dreams as a viable route to self-discovery. Do not be afraid — it is imperative that we (artists) “create at the same scale as others would destroy.” Wherever artists go art will happen. A medium or a tool is not art — the consciousness that imbues the medium is where the alchemy lies, whether one uses a stick, elephant dung or a neural network. “create at the same scale as others would destroy” is a paraphrased quote from pioneering satellite artist Sherry Rabinowitz. https://www.instagram.com/iotronik

From Jeff Kaiser:

Machine learning, like so many new technologies, seems to be stuck in the binary of the two V’s: valorization and vilification. (Or, maybe the two T’s: technophilia vs. technophobia?) Personally, I use it regularly and love it when mixing and mastering. I tend to run the “assistant” on various audio software and plugins for suggestions. I almost never go with the entirety of the suggestions the software makes, but they frequently provide a good starting point for consideration of dynamics, eq, reverb, et cetera. The software can also sometimes surprise me with a suggestion that I might not have considered and that I end up liking, so it can be a tool for breaking reified practices and opening up new possibilities.

My fear is that machine learning will become a tool of perpetuation and homogeneity, but these surprises give me hope. And keeping humans in the mix can help with keeping innovation present. When thinking of machine learning, my mind immediately goes to one of my other loves: chess. Grandmasters work with their software to generate novelties in the opening phase of the game, to refine their abilities in the middle and end game, and to analyze the style of their opponents. They then use this knowledge in the context of humans playing humans. I think this could be a good model for musicians: that the software doesn’t do all the work, but works with us in the very human realm of making music. JeffKaiser.com/links/

From Christopher DeLaurenti:

It is suspiciously easy to add AI-created music to a long list of labor-saving, profit-hoarding, job-killing technologies of the last 100 years: radio broadcasting; recording; Muzak; production music delivered on 16 rpm records; jukeboxes; amplification and PA systems; needle-drop production music LPs; sample pack CD-ROMs or digital downloads; MIDI-supplemented “orchestras” on Broadway; and online, royalty-free music beds and stems. The fevered interest in AI is the latest manifestation of the drive to reduce the greatest ongoing expense and chief source of dissent in business: human labor. Unlike the elements comprising the above admittedly incomplete list, AI-generated music will dispense with human makers altogether. Most of what it will make will resemble the music already made by humans: bad, but “good enough,” and occasionally great. Yet musicians and other thoughtful people can reframe and channel the oncoming destruction (or realignment) of the music business. Can you envision a world where money and music no longer need each other? Musicians have two decisions to make. The first one is easy: do nothing or fight for a universal basic income. Choosing the latter means you can create and not starve at a soul-killing job. The second decision strikes at the core of what it means to be an artist: am I making music only for fame, glory and domination or do I make sounds for the love of making and sharing music? Imagine having your music wanted — not for money or for environmental wallpaper — but by listeners who want to hear what another human being has to say in sound. AI-created music may mark the beginning of restoring a true human community, one which finds “likes” and other commercialized, remote-controlled components of friendship to be abhorrent and instead values the face-to face existence, presence and responses of other people.

Christopher DeLaurenti, composer and performer

From Josh Carney:

AI can generate music, but it often fails to generate the human elements in music that we love, which is why most AI music sounds a bit “off” to me. The “uncanny valley” that’s used to describe GCI characters and face de-aging VFX sticks out as a parallel. Most AI music sounds a bit uncanny to me, and I can’t quite put my finger on what it is, but it just doesn’t sound human; and therefore, it’s never quite as enjoyable as something composed by a real person. It’s as if the AI treats all musical combinations on an equal playing field; whereas, a human composer will latch on to certain combinations that move them emotionally. I don’t know if that is something AI can ever learn; it’s hard enough to teach creativity to people.

I don’t think AI will be replacing musicians, composers or producers anytime soon, but I do think that we are on the precipice of an AI revolution in all media. Many see AI as something that “cheapens” the industry, but I think those who view AI in this light are imagining the extremes of what AI could potentially do. In the short term, I see it as a tool to potentially make our jobs easier and our workflows more efficient. I’ve already used ChatGPT to tighten up YouTube scripts, and Topaz AI to sharpen up the look of my videos, but the human element is always there. If you told a music producer in 1973 that in 50 years they would be able to compose, arrange, record, mix, master, distribute and market a song, all on a small supercomputer that you can carry around with you in a briefcase, they would probably say you were crazy.

Who knows how AI will influence music production in the future, but I like to imagine a future where I can pull up an AI synthesizer plugin, feed it as many descriptors as I want, and instead of searching through presets or turning knobs, the synthesizer generates a palette of sounds for me to choose from. I believe future AI could be helpful in shortening the amount of time it takes to bring a musical idea in your head into existence. In my opinion, the real danger of AI is not AI itself, but rather how humanity adapts copyright laws, digital media ownership and labor standards to fit a world where AI assistance is the norm.

Joshua D. Carney Owner, Carney Media Group LLC

From ChatGPT

An initial response and two regenerated responses:

Response 1: As a language model AI, I do not have opinions, feelings or personal experiences. I am neutral and impartial in my responses. In regards to the use of AI in the arts, it is widely seen as a tool that can augment human creativity. AI can generate new ideas, patterns and images that can inspire artists and musicians, or even help in the creation of new forms of art. However, it is important to note that AI is not a substitute for human creativity and emotions. AI-generated art lacks the emotional and personal touch that only humans can provide.

Response 2: As an AI language model created by OpenAI, I do not have personal opinions or emotions. My purpose is to assist users in generating human-like text based on the input provided to me. However, the use of AI in the arts has been a topic of discussion and debate in recent years. Some see AI as a tool that can enhance and augment human creativity, while others view it as a threat to traditional forms of artistic expression. Ultimately, the impact of AI in the arts will depend on how it is used and integrated into the creative process.

Response 3: As a language model AI, I do not have personal opinions or emotions. However, I can provide information and insights about the use of AI in the arts. The use of AI in the arts has been a topic of interest and debate among artists, technologists, and critics. Some view AI as a tool for expanding creative possibilities, allowing artists to generate new forms of expression that would not be possible without technology. Others worry that AI may undermine the creative process, leading to homogenized and formulaic forms of expression. In my own case, as a language model AI, I have been used in several projects involving the arts, such as poetry, fiction, music and other forms of creative writing. AI can be used to generate new content, analyze existing works, or assist artists in the creative process. However, it’s important to note that AI does not create in the same way that human artists do, and the results may not have the same depth of meaning or emotional impact. Ultimately, the role of AI in the arts will continue to evolve as the technology advances and as artists experiment with new forms of expression.

Resources and Links

What is artificial intelligence?

The Future of Artificial Intelligence and Cybernetics

OpenAI – ChatGPT

How AI Will Make Everyone a Musician

Constructivism

Difference Between AI and Cybernetics

ChatGPT Detector Could Help Spot Cheaters Using AI to Write Essays

Machine Learning Explained

Connecting Deep Learning Developers With Sound Artists

Music and Artificial Intelligence Implications for Artists and the Industry

Noam Chomsky on Artificial Intelligence, Language and Cognition

Check out my other articles, reviews, interviews, and my video tutorial series, Synthesis 101 available exclusively on The Pro Audio Files. Follow me on Twitter: @PMantione Instagram: philipmantione YouTube Channel
